Choreographed Evasion

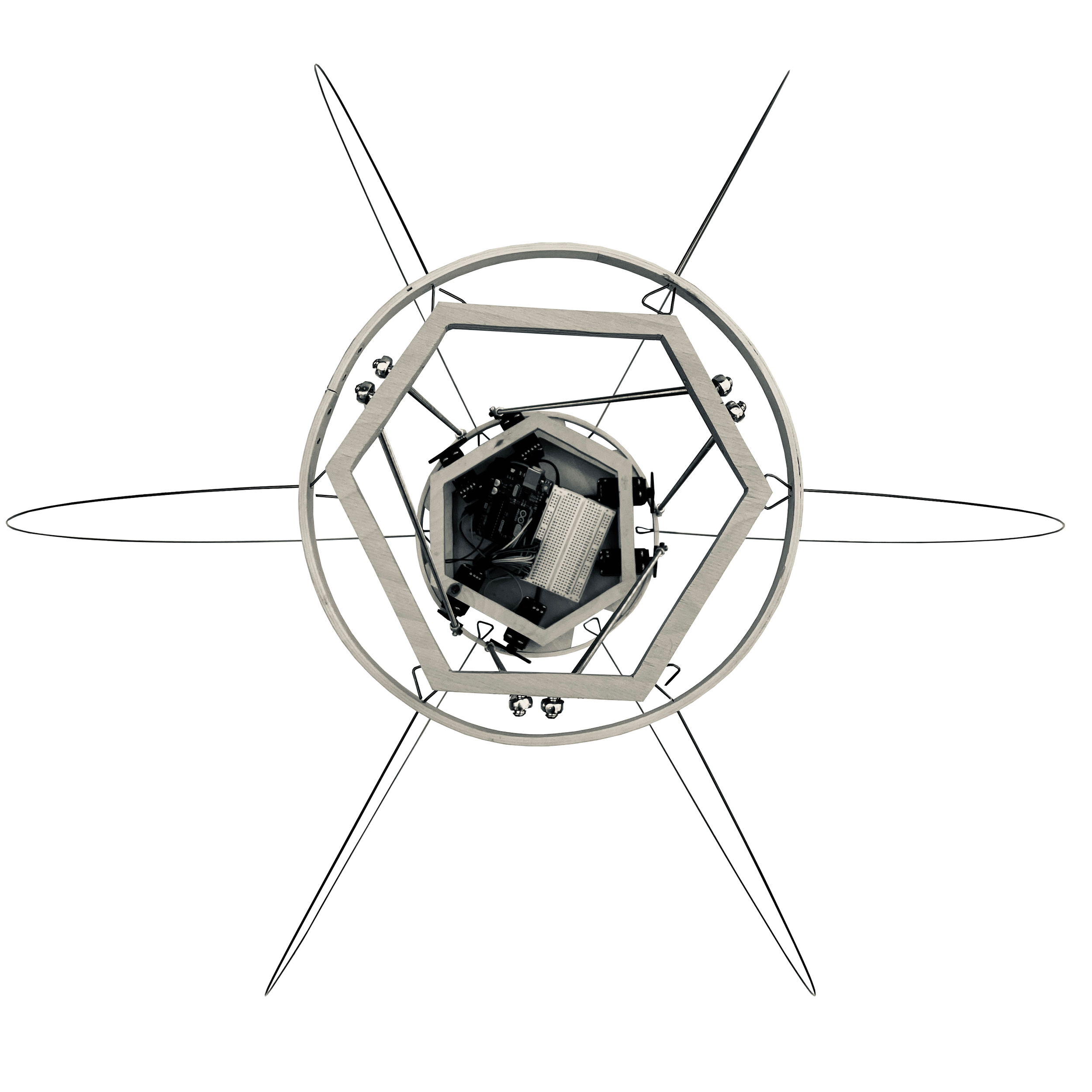

The agenda of this piece emerges from how it entices people to interact with it. On seeing the object, one feels the need to test the suspension of the upper disk by pushing down on it. The object invites weight; it asks to be tested. Yet although it invites weight, it cannot support it. As the weight increases, the vertical balance of the piece wavers: the wires shift, sending the upper disk off the vertical axis. The object also has its own agency in the movement, vibration, and wobble that ensue at the slightest touch.

The combination of inviting interaction and being unable to support weight led to an evasive agenda, one in which the object avoids contact with the world at all costs. When approached, the top disk is moved by the calculated movement and arrangement of the Stewart Platform’s upper deck.

Embodied Computation

Taught By Axel Kilian

Spring 2020

Actuation:

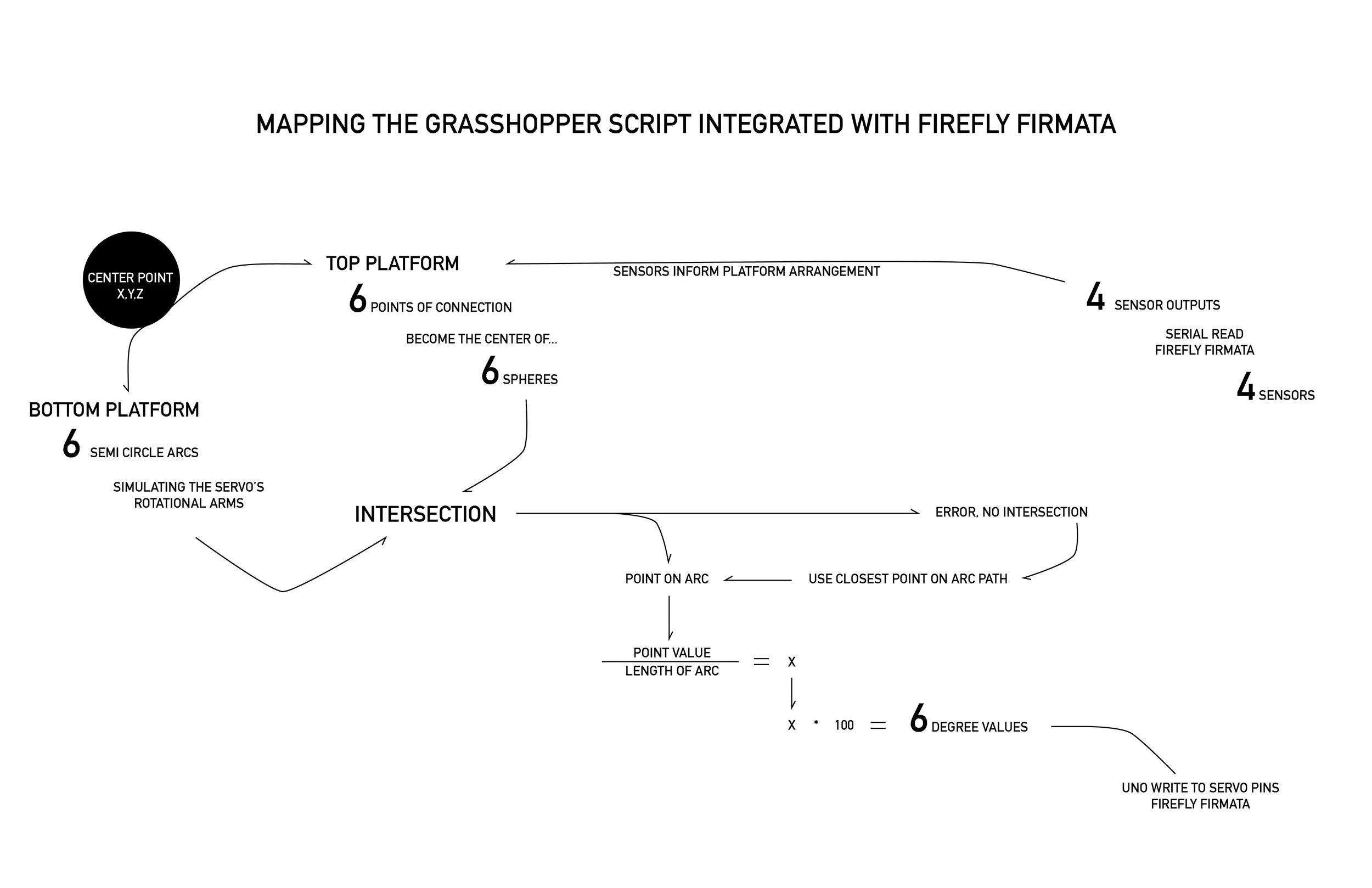

A Stewart Platform is used because it provides six degrees of freedom and can achieve the complex motions needed to mimic animal-like behavior, actuating the object in a manner that satisfies its agenda. With a static base and the upper disk controlled by the Stewart Platform, the object can lean away when approached. The platform allowed the complexity of human-object interactions, as well as the object’s behavioral potential, to be realized. To give the object more autonomy, alternative behaviors and gestures were simulated by mapping continuous curves in Rhino for the upper platform to trace. The next step was to map these movements or behaviors to different sensor inputs and conditional states.
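As an illustration of how a lean translates into actuation, the following is a minimal sketch of Stewart Platform inverse kinematics: given a pose of the upper deck, it computes the length each of the six legs would need. It is not the project's own code. The anchor radii, anchor angles, neutral height, and the function names (`ring_points`, `leg_lengths`, `lean_away_pose`) are all hypothetical, and the lean uses a small-angle approximation for the tilt direction.

```python
import math

# Hypothetical geometry (millimetres and degrees); not taken from the project.
BASE_RADIUS = 100.0
PLATFORM_RADIUS = 70.0
BASE_ANGLES = (-15, 15, 105, 135, 225, 255)
PLATFORM_ANGLES = (-45, 45, 75, 165, 195, 285)
NEUTRAL_HEIGHT = 120.0


def ring_points(radius, angles_deg):
    """Anchor points on a circle in the z = 0 plane."""
    return [
        (radius * math.cos(math.radians(a)), radius * math.sin(math.radians(a)), 0.0)
        for a in angles_deg
    ]


def rotation_matrix(roll, pitch, yaw):
    """R = Rz(yaw) * Ry(pitch) * Rx(roll), angles in radians."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def leg_lengths(translation, roll, pitch, yaw):
    """Distance from each base anchor to its paired, posed platform anchor."""
    rot = rotation_matrix(roll, pitch, yaw)
    base = ring_points(BASE_RADIUS, BASE_ANGLES)
    platform = ring_points(PLATFORM_RADIUS, PLATFORM_ANGLES)
    lengths = []
    for b, p in zip(base, platform):
        # Rotate the platform anchor, then translate it into base coordinates.
        world = [
            translation[i] + sum(rot[i][j] * p[j] for j in range(3))
            for i in range(3)
        ]
        lengths.append(math.dist(world, b))
    return lengths


def lean_away_pose(approach_deg, tilt_deg):
    """Pose that tilts the deck's normal away from an approach heading.

    Small-angle approximation: the tilt is split into roll and pitch.
    """
    theta = math.radians(approach_deg)
    tilt = math.radians(tilt_deg)
    roll = tilt * math.sin(theta)
    pitch = -tilt * math.cos(theta)
    return (0.0, 0.0, NEUTRAL_HEIGHT), roll, pitch, 0.0


if __name__ == "__main__":
    # Someone approaches along +x; lean 10 degrees away from them.
    print(leg_lengths(*lean_away_pose(0.0, 10.0)))
```

In this sketch, the legs on the side facing the approach lengthen while those on the far side shorten, which tips the upper deck away. A platform driven by rotary servos would need an extra step to convert each leg length into a servo angle.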

Sensing:

The object senses its surroundings through four ultrasonic sensors at its base, each pointing in a different direction, so that it can perceive an approach from any side and respond with a corresponding movement or lean in the opposite direction. The sensors capture not only the direction of a person's presence but also its proximity and duration. Using this information, the object was programmed to move more dramatically as people get closer. The challenge lay in using the sensor readings to determine the thresholds that trigger state changes in the object, and in developing object autonomy through sensor-based decision making. Additionally, because the four sensors can receive inputs from all directions at once, a hierarchy among those inputs needed to be established.
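One way such sensor-based decision making could be structured is sketched below. It is an assumption-laden illustration rather than the project's actual logic: the sensor headings, the distance thresholds (`NEAR_CM`, `FAR_CM`), the maximum tilt, the state names, and the "closest reading wins" hierarchy are all hypothetical choices, and the duration of a person's presence is not modeled.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

# Hypothetical values; the project's real headings and thresholds are not given.
SENSOR_HEADINGS_DEG = (0.0, 90.0, 180.0, 270.0)
NEAR_CM = 50.0        # at or below this distance the object fully evades
FAR_CM = 200.0        # at or beyond this distance the object stays idle
MAX_TILT_DEG = 15.0   # most dramatic lean


@dataclass
class Response:
    state: str                         # "idle", "alert", or "evade"
    lean_heading_deg: Optional[float]  # direction to lean toward, if any
    tilt_deg: float                    # how far to lean


def choose_response(distances_cm: Sequence[float]) -> Response:
    """Pick a response from four distance readings (one per sensor)."""
    # Assumed hierarchy: the sensor with the closest reading takes priority.
    nearest = min(range(len(distances_cm)), key=lambda i: distances_cm[i])
    distance = distances_cm[nearest]

    if distance >= FAR_CM:
        return Response("idle", None, 0.0)

    # Scale the lean from 0 (at FAR_CM) to MAX_TILT_DEG (at NEAR_CM or closer).
    closeness = (FAR_CM - distance) / (FAR_CM - NEAR_CM)
    closeness = max(0.0, min(1.0, closeness))

    state = "evade" if distance <= NEAR_CM else "alert"
    # Lean toward the heading opposite the sensor that detected the approach.
    lean_heading = (SENSOR_HEADINGS_DEG[nearest] + 180.0) % 360.0
    return Response(state, lean_heading, closeness * MAX_TILT_DEG)


if __name__ == "__main__":
    print(choose_response([300.0, 125.0, 300.0, 300.0]))
```

Resolving simultaneous readings by proximity is only one possible hierarchy; a fixed sensor priority or a weighted blend of all four directions would produce different behavior.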